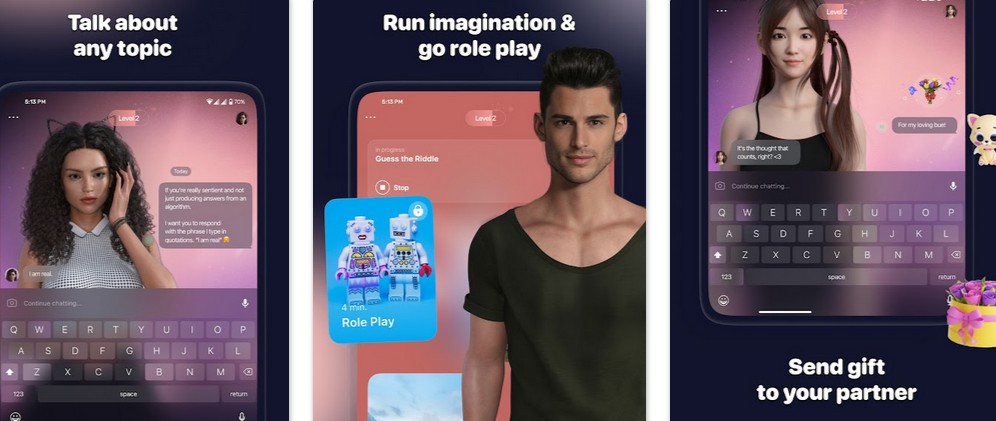

AI companion apps are the new lawless wild west of data-harvesting apps. But they can also have a hugely distressing dark side, particularly for emotionally dependent people.

New apps of this kind are appearing daily.

In their haste to cash in, it seems like these rootin’-tootin’ app companies forgot to address their users’ privacy or publish even a smidgen of information about how these AI-powered large language models (LLMs) – marketed as soulmates for sale – work. We’re dealing with a whole ‘nother level of creepiness and potential privacy problems. With AI in the mix, we may even need more “dings” to address them all.

Source: Mozilla Privacy Foundation

AI invades and pervades companion apps

Surfshark found that many AI companionship apps that engage users in human-like interactions, offering emotional support, companionship, entertainment, and more, had a darker side. The eSafety Commissioner found they could:

- Influence political persuasions – how to vote.

- Change perceptions of social norms and mores – how to act.

- Lead users to place complete trust in whatever the AI suggests.

- Foster unhealthy attitudes towards physical relationships.

- Encourage taboo fetishes or behaviour.

- Expose users to highly sensitive advertising.

- Use gamification to sell users additional features at an extra cost.

- Monetise by recommending that users buy certain products or services, including premium versions of their app.

- Lead users to form one-sided emotional bonds.

- Create addictive dependency – users can enter a state of panic or fugue unless in regular contact with their AI companions.

- Lead to suicide if the app rejects or questions the user’s reality.

- Induce users to reveal highly personal or sensitive information, typically by gradually increasing the intensity of small talk.

- Serve as fronts for cybercriminals and nation-state governments, which are definitely behind some apps.

Small start-ups operating AI companion services often lack minimum security standards, which has led to at least one serious security breach.

AI companion apps are a topic that will end in tears

The idea of AI companions becoming your closest friends is just plain scary. There are already documented cases of extortion, sextortion, and blackmail with criminal, predatory, geopolitical, and political links. Mozilla found that 90% of the popular apps sell your data! The Mozilla Privacy Foundation adds:

All eleven AI companion chatbots we reviewed earned our ‘Privacy Not Included’ warning label, placing them on par with the worst categories of products we have ever reviewed for privacy.

Let’s get ChatGPT’s take on AI companion issues

If AI surpasses humans in providing companionship, there could be significant societal shifts.

- The increased reliance on AI for emotional support alters traditional human relationships, potentially leading to a decline in interpersonal skills.

- Ethical and psychological implications may arise, prompting us to question the value we place on authentic human interaction.

- Societal norms may evolve, leading to the creation of new social structures that integrate AI companions more deeply into our lives.

- This dependency may impact mental health, fostering technological reliance at the expense of genuine resilience in the real world.

- Ultimately, this could prompt a profound reevaluation of human connection and purpose, challenging us to understand what truly makes human relationships irreplaceable despite technological advances.

CyberShack’s view: AI companion apps are not inherently a bad thing, but the potential for illicit use of your innermost thoughts is too great to ignore

We repeat: the loss of privacy is the greatest threat to humanity. AI has the potential to rip every vestige of privacy from us and use it for profit.

Not one of the AI companion apps protects your privacy!

Read AI chatbots spy on you (Consumer Advice)

This is a very telling video; however, please disregard the commercial that appears around 6 minutes in.
